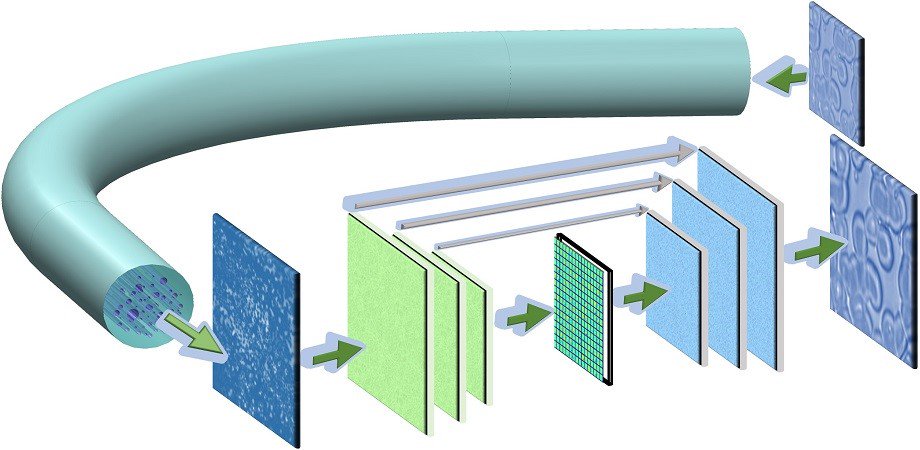

Image recording with white light through GALOF optical fibers, with neural network image reconstruction

The principle behind the KaraSpace optical fiber imaging system has just been confirmed once again, by a publication from a joint study of the University of Central Florida's College of Optics and Photonics in Orlando, Florida, and the Changchun Institute of Optics in China.

Published in the journal Advanced Photonics on 11 November 2019

This is a billion-dollar hint for investors in the KaraSpace development companies. The claim of KaraSpace Labs that complete images of medium-high resolution can be sent through a single multimode optical fiber (MMF), in both directions, is hard to believe. It is now confirmed by an independent scientific publication.

The research publication targets medical endoscopy: taking pictures of living cells through a single optical fiber. KaraSpace uses the same concepts for mainstream AR glasses. The whole point is to make AR glasses as small as frameless, minimalistic spectacles. Only thin optical fibers then need to be placed in front of the face, hidden in the frame and inside the lenses of the spectacles.

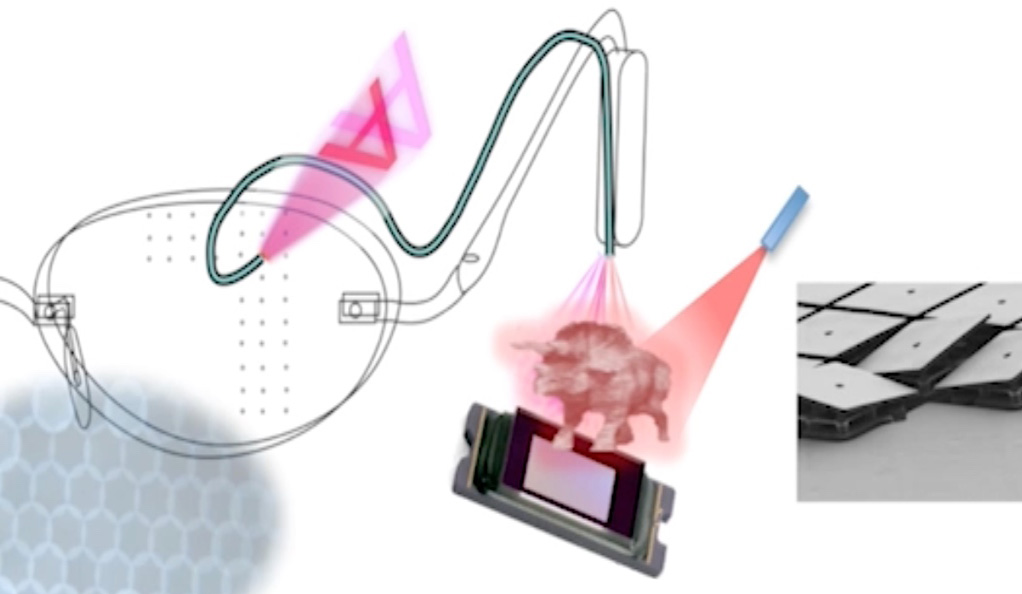

Image projection with color-separated RGB light through MMF optical fibers and fast-switching DMD SLM chips

In one direction, the optical fibers record the surroundings and track the eye movement (eye tracking). The challenge is that the light entering the fiber for this recording is ordinary white light. To reconstruct the image at the far side of the fiber, the exiting white-light pattern is transformed by a deep-learning neural network. In addition, a special glass–air Anderson localizing optical fiber (GALOF) is used.

In the other direction, the optical fibers project multiple images of medium-high resolution, forming a grid of images that together build up the total image (integral imaging). A multimode optical fiber (MMF) is used for this. Each projection must use a single color to create the correct image on the other side. The challenge here is that a normal intensity map is not sufficient: the phase of the wavefront must also be correct.

For this, a fast-switching DMD micromirror chip is used as a spatial light modulator (SLM) to create the complex wavefront. The RGB colors and the sub-images are then displayed in fast sequence.
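The article does not say how a binary micromirror chip encodes phase. One well-known technique is Lee holography: a carrier grating is shifted sideways by the desired local phase, and its duty cycle is set by the desired local amplitude, so that one first diffraction order carries the target complex field. The sketch below assumes that technique; the function name `lee_hologram` and the carrier `period` are hypothetical choices, not KaraSpace parameters.

```python
import math


def lee_hologram(amplitude, phase, period=8):
    """Binary DMD pattern encoding a complex field A*exp(i*phi) (Lee method).

    amplitude, phase: 2D lists of equal shape; amplitude is clipped to [0, 1],
    phase is in radians. period: carrier period in mirrors along x
    (hypothetical value). The target field appears in a first diffraction
    order, which is typically selected with a spatial filter.
    """
    pattern = []
    for row_a, row_phi in zip(amplitude, phase):
        row = []
        for x, (a, phi) in enumerate(zip(row_a, row_phi)):
            a = min(max(a, 0.0), 1.0)
            # Duty-cycle parameter: w = asin(A)/pi makes the first-order
            # amplitude proportional to sin(pi*w) = A.
            w = math.asin(a) / math.pi
            # The local phase shifts the carrier fringes sideways.
            carrier = math.cos(2 * math.pi * x / period - phi)
            row.append(1 if carrier > math.cos(math.pi * w) else 0)
        pattern.append(row)
    return pattern


if __name__ == "__main__":
    # Uniform amplitude with a phase step of pi halfway along each row.
    amp = [[1.0] * 16 for _ in range(2)]
    phi = [[0.0] * 8 + [math.pi] * 8 for _ in range(2)]
    for row in lee_hologram(amp, phi):
        print("".join("#" if v else "." for v in row))
```

Because every pattern is purely binary, this kind of encoding can run at the full binary pattern rate of the micromirror chip.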

The scientific publication describes these capabilities in the context of an endoscope and specifies the performance of the system. Because it focuses on using a neural network to learn and apply the transformation of the wavefront, it describes wavefront shaping with an SLM as problematic for white light. For image projection, however, the light must be separated into RGB anyway. The publication also describes SLMs as very slow, but this is only true for LCD SLMs. DMD SLMs are among the fastest, with over 32,000 Hz for binary images. This is exactly what the AR glasses projection and the integral imaging system need.
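The speed argument can be turned into a simple frame budget. This sketch assumes that every color channel, every resolution-increasing shot and every sub-image sharing the same DMD needs its own binary pattern, shown strictly one after another; how many sub-images share one chip is not stated in the article, so it is left as a parameter. The function name is hypothetical.

```python
def full_image_rate(pattern_rate_hz, colors=3, shots=1, subimages_per_dmd=1):
    """Complete color images per second from one binary DMD.

    Assumes one binary pattern per color, per shot and per sub-image on the
    same chip, displayed sequentially (no grayscale, no overlap).
    """
    patterns_per_image = colors * shots * subimages_per_dmd
    if patterns_per_image <= 0:
        raise ValueError("need at least one pattern per image")
    return pattern_rate_hz / patterns_per_image


if __name__ == "__main__":
    rate = 32_000  # binary pattern rate quoted in the text
    for shots in (1, 4):
        print(f"{shots} shot(s): {full_image_rate(rate, shots=shots):.0f} Hz")
```

Writing the budget this way makes the trade-off explicit: each extra shot, or each additional sub-image multiplexed on a shared chip, lowers the full-image rate proportionally.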

Projection and recording fibers hidden in the spectacles

For the KaraSpace system, the publication means that at least the eye tracking can readily be performed with the described GALOF optical fiber in combination with deep convolutional neural networks (DCNN), since eye tracking only has to deal with ordinary white light.

The discussion and conclusion chapter of the publication summarizes the properties relevant to the MMF fiber projector as follows:

The mode density of an MMF is reported to be around 1 mode per µm². A fiber of 200 µm radius therefore carries about π × 200² ≈ 125,700 modes, and since √125,700 ≈ 354, its image resolution is comparable to a 354 x 354 pixel image in one shot.
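The pixel figure follows from multiplying the reported mode density by the fiber cross-section and taking the square root. This sketch makes the simplifying assumption (ours, not the paper's) that one guided mode corresponds to one pixel and that the quoted radius is the core radius; the function names are hypothetical.

```python
import math


def mode_count(mode_density_per_um2, radius_um):
    """Approximate number of guided modes: density times core area."""
    return mode_density_per_um2 * math.pi * radius_um ** 2


def equivalent_square_side(modes):
    """Side of a square pixel grid with at most one pixel per mode."""
    return math.isqrt(int(modes))


if __name__ == "__main__":
    mmf = mode_count(1.0, 200.0)
    side = equivalent_square_side(mmf)
    print(f"MMF: {mmf:,.0f} modes -> {side} x {side} px")
```

The same two functions reproduce the GALOF estimate given further below (10 modes per µm² at 100 µm radius gives 560 x 560).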

In MMFs the whole mode structure can be changed and rotated as a whole, so with multiple shots the number of resolvable pixels grows with each additional shot in the sequence: after 4 shots, for example, the pixel count is four times larger and the side length twice as long, the equivalent of 708 x 708 pixels. The KaraSpace system uses this increase in resolution only in the area where the user is actually looking (hardware foveated rendering).
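The multi-shot scaling and the foveated allocation of shots can be sketched together. The sketch assumes each shot contributes as many new pixels as the first (so the side grows with the square root of the shot count) and that "foveated" means giving extra shots only to sub-screens within a radius of the gaze point. The grid size, gaze coordinates and all names are hypothetical.

```python
import math


def side_after_shots(base_side, shots):
    """Equivalent square side after `shots` independent mode patterns.

    Assumes the pixel count grows linearly with the number of shots,
    so the side length grows with its square root.
    """
    return int(base_side * math.sqrt(shots))


def foveated_shot_map(tiles_x, tiles_y, gaze, fovea_radius, fovea_shots,
                      periphery_shots=1):
    """Shots per sub-screen: extra shots only for tiles near the gaze point.

    gaze is given in tile coordinates (x, y); returns rows of shot counts.
    """
    gx, gy = gaze
    return [
        [fovea_shots if math.hypot(x - gx, y - gy) <= fovea_radius
         else periphery_shots
         for x in range(tiles_x)]
        for y in range(tiles_y)
    ]


if __name__ == "__main__":
    print(side_after_shots(354, 4))
    shot_map = foveated_shot_map(15, 10, gaze=(7, 5), fovea_radius=1,
                                 fovea_shots=4)
    for row in shot_map:
        print(" ".join(str(s) for s in row))
```

Concentrating the extra shots on a few tiles keeps the total number of patterns per frame, and thus the DMD time budget, far below what full-field multi-shot projection would need.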

The assessment in the scientific report corresponds in general to the findings of the KaraSpace Lab, but the potential for increasing the resolution is still very high. An integral imaging grid of 15 x 10 such sub-screens thus results in a virtual resolution of at least a 15K monitor for each eye.
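The grid arithmetic can be made explicit. This sketch assumes the sub-screens tile the view edge to edge without overlap; the article does not state which per-tile resolution its 15K estimate is based on, so the tile side is left as a parameter. The function names are hypothetical.

```python
def grid_resolution(tiles_x, tiles_y, tile_side):
    """Native pixel dimensions of a grid of square, non-overlapping tiles."""
    return tiles_x * tile_side, tiles_y * tile_side


def megapixels(width, height):
    return width * height / 1e6


if __name__ == "__main__":
    for side in (354, 708):
        w, h = grid_resolution(15, 10, side)
        print(f"tile {side} px -> {w} x {h} px ({megapixels(w, h):.1f} MP)")
```

Comparing the single-shot and four-shot cases shows how directly the total depends on the number of shots per tile.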

The mode density of a GALOF is reported to be around 10 modes per µm². A thinner fiber of 100 µm radius for the camera therefore carries about π × 100² × 10 ≈ 314,000 modes, an image resolution comparable to a 560 x 560 pixel image. With this type of optical fiber, however, the mode structure cannot be rotated to gain more resolution.

The dependence of the imaging system on fiber bending is not critical in AR glasses, as the fiber position is fixed during projection. When the glasses are folded, the fibers bend, but they always return to their original position when unfolded.

The temperature dependence of the imaging system is also not a problem, as it can be corrected with temperature sensors and software adjustments.
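The article does not describe how the software correction works. One simple scheme, assumed here purely for illustration, is to store calibration data measured at several temperatures during factory calibration and, at run time, select the record measured closest to the current sensor reading. The class `TemperatureCalibration` and its method are hypothetical.

```python
import bisect


class TemperatureCalibration:
    """Hypothetical lookup of factory calibration records by temperature."""

    def __init__(self, table):
        # table: dict mapping temperature in degrees C -> calibration record
        # (for example a measured phase map); must not be empty.
        self._temps = sorted(table)
        self._table = table

    def nearest(self, temp_c):
        """Return the record measured closest to temp_c."""
        i = bisect.bisect_left(self._temps, temp_c)
        # Only the neighbours around the insertion point can be closest.
        candidates = self._temps[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda t: abs(t - temp_c))
        return self._table[best]


if __name__ == "__main__":
    cal = TemperatureCalibration({20.0: "map@20C", 30.0: "map@30C", 40.0: "map@40C"})
    print(cal.nearest(33.5))
```

Interpolating between neighbouring records would be a natural refinement when the stored data are numeric.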

The apparatus for measuring the phase map is costly, but it is only needed for calibrating the system at the factory.

The KaraSpace integral fiber optical design for frameless, minimalistic AR spectacles with uncompromised resolution relies on many more optical subsystems, but these are all proven to work, just like the optical fiber projector.

These include the cloaking tubes using transformation optics, MEMS technology for fast optical switches, nanoscale 3D printing of optical lenses, and so on.

All this information hopefully makes the KaraSpace optical system easier to understand, and shows why it is the only optical technology platform today that can be developed further to build mainstream AR glasses in the next decade.